Discover the Cutting Edge with the Graphics and Vision Group!

Join the GV team at Stack B from 12pm to 5pm on 26th September for a behind-the-scenes lab tour and explore their groundbreaking work in visual computing! The Graphics & Vision Group at Trinity College Dublin specializes in computer graphics, virtual human animation, augmented and virtual reality, and computer vision. Their research drives innovative solutions with diverse, real-world applications, from real-time rendering to complex simulations.

Beyond the Headset: The Science of Captivating VR Stories

Dive into the heart of virtual reality! Have you ever wondered what makes a story truly captivating? It's not just about what you see, but how you feel and how deeply you connect. At European Researchers' Night, we're revising a tool that lets us measure exactly that: the Narrative Engagement Scale (NES). This isn't just about whether a VR experience is comfortable; it's about understanding your sense of presence, your emotional connection, and what drives you to keep exploring. We're inviting you to be part of our exciting research and help us shape the future of storytelling. Come join our live demonstration, immerse yourself in a virtual world, and see firsthand how we're unlocking the secrets of truly engaging narratives.

Adaptive Workflows for XR Journalism: Integrating AI and Volumetric Video on Mobile

Volumetric video (VV) offers a compelling medium for immersive journalism, enabling three-dimensional representations of people and events that can be experienced spatially through extended reality (XR). Despite growing interest in VV for audience-centered storytelling, practical deployment, particularly on mobile platforms, remains constrained by complex pipelines and limited automation support. This work presents a mobile XR demonstrator that enables users to browse a VV gallery and instantiate selected assets into their physical environment using a marker-based augmented reality interface.

The system architecture combines Unity-based development with a backend automation layer powered by n8n, a low-code workflow orchestration tool. Drawing on established practices in creative technology pipelines, the implementation automates VV asset management, metadata parsing, cloud delivery, and event-based triggers with minimal manual intervention. The workflow supports adaptive content integration and provides a modular structure for future extensibility.

This prototype contributes to the TRANSMIXR project’s objectives by demonstrating how AI-assisted workflows can bridge broadcast media and immersive mobile experiences. The system facilitates scalable, low-overhead deployment of VV content for XR journalism, emphasizing accessibility, configurability, and cross-platform compatibility. Preliminary evaluations focus on pipeline efficiency, response latency, and integration robustness. These findings support ongoing efforts to make immersive storytelling more operationally viable for journalistic applications and inform future research in automation for XR content delivery.

Shoot once, frame later with Dynamic Radiance Fields from multi-view videos

What if we left camera framing for the post-processing stage? Movie directors may shoot the same scene multiple times to try different camera framings, but this can be costly and is not always reproducible (e.g. explosions, emotional acting). In an ideal world, the scene is shot once and the framing is chosen later. Learning a Dynamic Radiance Field of the scene from multiple fixed-viewpoint cameras allows us to achieve this. Come and see how we turn multi-view videos of a scene into a Dynamic Radiance Field to achieve cool effects like novel view synthesis, slow motion, and more!

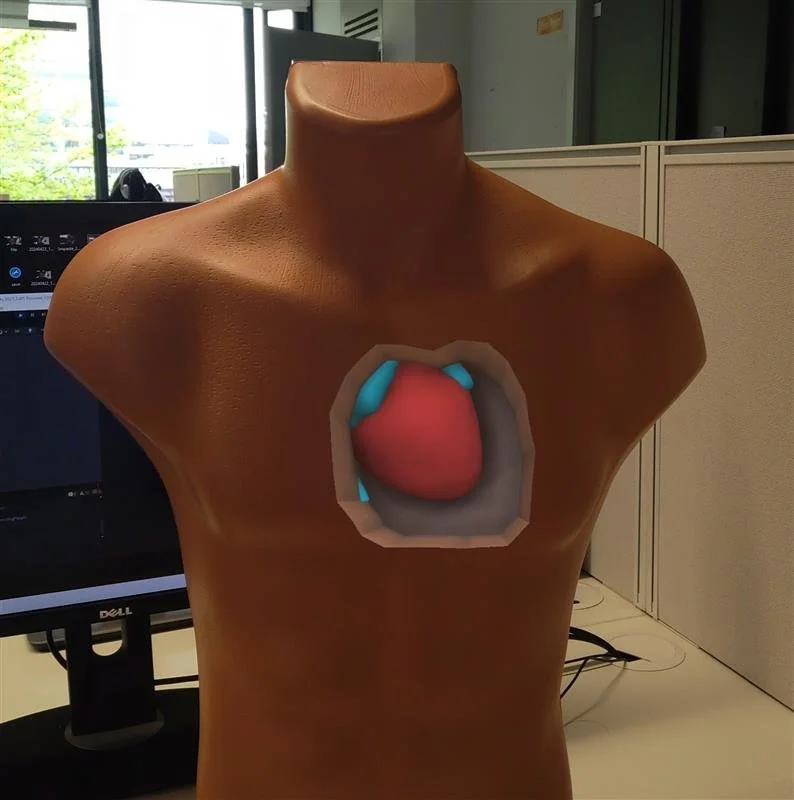

Tangible Interactions in Mixed Reality

We present a demonstration of our research on using tangible interfaces to intuitively author and visualize 3D structures in Mixed Reality (MR). Visually embedding internal models into real objects is a common approach for explanatory and instructional visualizations in domains such as anatomy, engineering, and geosciences. However, such mixed reality visualizations often suffer from spatial ambiguity and can be difficult to create without technical proficiency in 3D modeling or graphical programming. To address these issues, we propose an approach that leverages tangible interaction, allowing users to touch, hold, and feel physical objects attached to elements of the mixed reality scene. We believe that this approach eases the modeling process, increases interaction accuracy, and improves understanding of the relative 3D spatial positions of objects.

Echoes of the Creator: Immersive Co-creative Storytelling in XR

Join Yifan Chen in an interactive demonstration of Echoes of the Creator, an immersive VR experience where participants engage in co-creative world-building. In this environment, users alternate between a “God” perspective—manipulating and arranging architectural blocks—and a “Mortal” perspective, experiencing the scaled-up creations in detail. Throughout the journey, participants interact with two AI agents, Nebu and Echo: one providing professional architectural guidance, the other embodying human imagination. Through dynamic spatial storytelling, AI mediation, and multimodal interaction, the demo explores how immersive storytelling fosters engagement, collaboration, and creativity in shared virtual spaces. Participants will experience firsthand how AI-driven characters and interactive design elements enhance narrative immersion and co-creative reflection.

3D Human and Cloth Reconstruction

Have you ever imagined creating your own photorealistic 3D clothing from just images? This work takes images of a person as input and generates both photorealistic 3D clothing and the underlying 3D human body model. It uses Gaussian Splatting, a state-of-the-art rendering technique, as its rendering representation and produces explicit 3D meshes that are ready for simulation in 3D virtual try-on applications.

Becoming a Gorilla: Embodying the Primal Presence in VR

What if you could step into the body of another creature? In this hands-on VR demonstration, you’ll embody a gorilla. Your movements are mapped in real time to a life-sized gorilla avatar, letting you explore how it feels to move, reach, and interact in a completely different body.

This work is part of RADICal, an SFI-funded project that is rethinking how actors and filmmakers approach motion capture. By studying how people adapt to embodying virtual bodies (human or non-human), we aim to develop techniques that make virtual performances more natural and believable. Our research combines computer graphics, motion tracking, and neuroscience to investigate how strongly people feel ownership over their virtual body and which factors enhance this illusion.

Behind the Window: Data Privacy in a Virtual World

As Extended Reality (XR) technologies become more prevalent, so too do concerns about user privacy. To function correctly, these devices collect vast amounts of data, from explicit user input to subtle nonverbal cues. This data, especially when combined, can reveal sensitive information about a user, raising concerns about privacy, consent, and potential manipulation. At the same time, profit-driven businesses may be incentivised to push ethical boundaries in order to maximise gain. As former Google CEO Eric Schmidt once remarked, the strategy is often to operate “right up to the creepy line.” This session explores what happens when XR technologies cross that line, and why it matters for all of us as we step into immersive digital worlds.

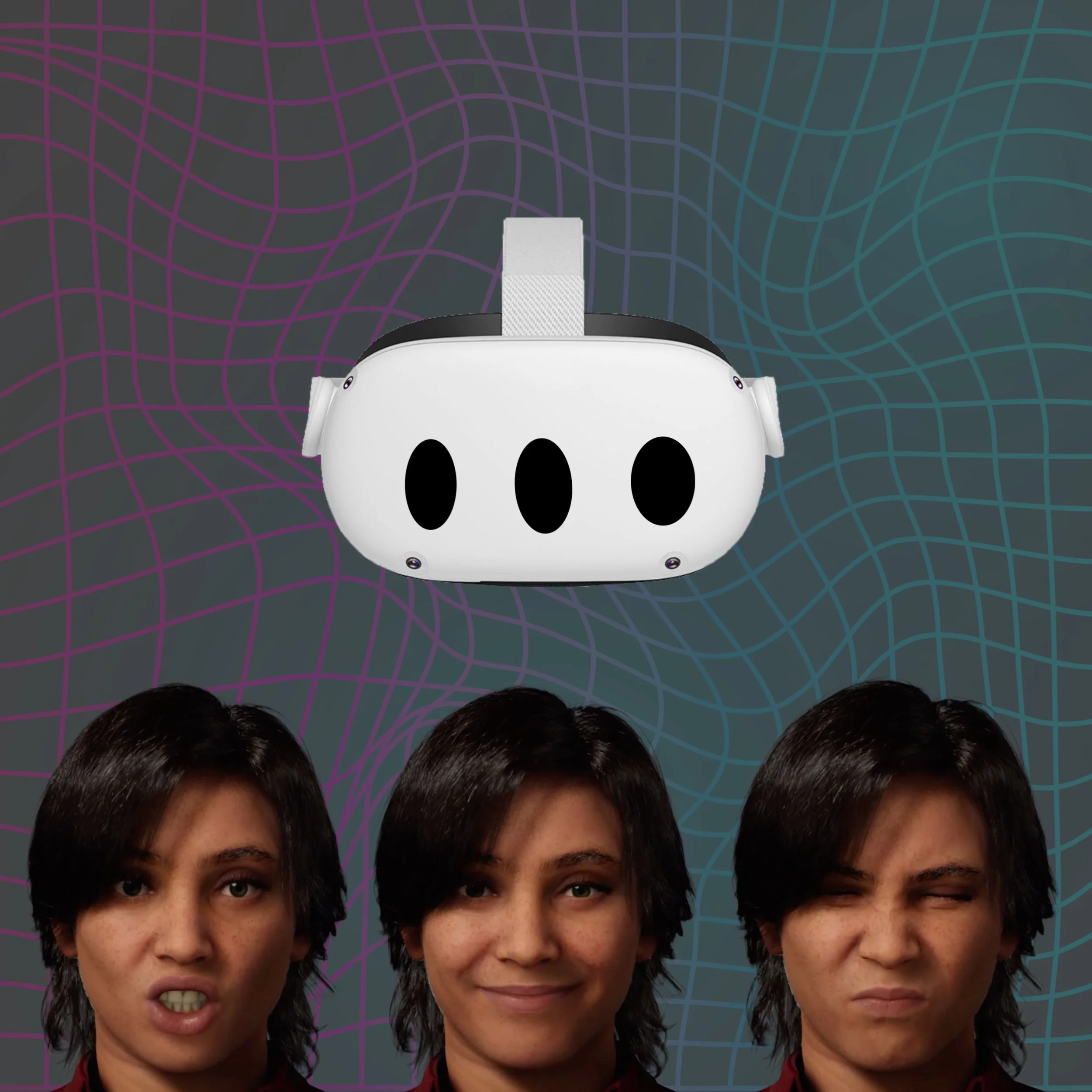

AI-Driven Avatars in Extended Realities

With AI animation on the rise, this research explores the different approaches available and aims to create a workflow that best combines AI with traditional animation methods. Among the questions we will look into: To what extent is the user immersed? How do these avatars affect the user’s sense of presence? And how emotionally engaging is the relationship between the digital actor in the virtual space and you in the physical world?

Immersive Portfolio: Showcasing Creative Work in Virtual Reality

We present a demonstration of an immersive virtual portfolio, with applications for 3D artists and game designers. Traditional portfolios often rely on static images or videos, which limit their ability to fully communicate the spatial, interactive, and experiential aspects of creative work. To address these limitations, we explore the transition from 2D to 3D, leveraging Virtual Reality to create a navigable virtual environment where each project can be represented as an interactive 3D object or space.

Exploring the boundary of the Uncanny Valley through embodiment

Have you ever felt uncomfortable when looking at a video game character because it seemed too realistic, or had proportions that felt “off”? This phenomenon is called the Uncanny Valley. But what happens if we actually embody such a character? Could it change the way we perceive it? In this demonstration, you will embody different avatars: highly realistic faces, low-resolution faces, a humanoid with monstrous features, and a stylized cartoon-like human. The goal is to investigate how appearance influences perception when experienced through embodiment, and to better understand the sense of eeriness triggered by the Uncanny Valley.

Logaculture – Battle of the Boyne Augmented Reality Experience

The Logaculture – Battle of the Boyne Augmented Reality Experience is an immersive audio-augmented reality intervention designed to deepen visitors’ understanding of one of Ireland’s most significant historical sites. Through the use of location-based AR and spatial audio, visitors are invited to explore the grounds of the Battle of the Boyne while encountering layered narratives, reconstructed soundscapes, and historically informed perspectives. The experience connects cultural heritage with contemporary digital storytelling by situating visitors directly within the landscapes of history.

The Living Laboratory: Designing Tomorrow’s Plant Technologies

What if plants became the technologies of the future? In this interactive demonstration, participants will step into a world where vegetation evolves new abilities—turning into living archives, symbiotic partners, or even tools for communication. Each person begins by choosing a plant, then draws cards that reveal its speculative future: a new ability, a form of human–plant interaction, and a stakeholder who uses it. Guided by the plant’s own biosignals, transformed into live generative music, participants will use OpenBrush in virtual reality to sketch these future concepts and stories. This living laboratory invites us to rethink our relationship with nature and wonder: what futures might we grow together?